Outcome

By the end of this part, we will be able to set up a local Kubernetes cluster, deploy our gRPC service together with Envoy, and automatically update both whenever the code changes.

What is Kubernetes?

Kubernetes, or k8s, is a container orchestration system built to automate the deployment, scaling, and management of containerized applications.

We have containerized our gRPC service so that we can deploy it into a k8s cluster, scale it, manage its resources, and so on. From a developer’s point of view, k8s is a universal environment for applications: it doesn’t matter whether the cluster itself runs in GCP, AWS, Azure, or on localhost. We will use localhost, but the example will work in the cloud too, with minimal changes.

k8s on localhost

The easiest way to run k8s locally, at least for me, is to use minikube.

minikube runs a single-node k8s cluster inside a virtual machine or, as in the output below, a Docker container. There are several similar tools, but I chose this one because it was effortless to start.

To prepare minikube, install it (see the minikube installation instructions) and start it with:

% minikube start
😄 minikube v1.23.2 on Arch
✨ Automatically selected the docker driver
👍 Starting control plane node minikube in cluster minikube
🚜 Pulling base image ...
💾 Downloading Kubernetes v1.22.2 preload ...
    > preloaded-images-k8s-v13-v1...: 511.84 MiB / 511.84 MiB 100.00% 15.07 Mi
    > gcr.io/k8s-minikube/kicbase: 355.39 MiB / 355.40 MiB 100.00% 10.15 MiB p
🔥 Creating docker container (CPUs=2, Memory=5900MB) ...
🐳 Preparing Kubernetes v1.22.2 on Docker 20.10.8 ...
    ▪ Generating certificates and keys ...
    ▪ Booting up control plane ...
    ▪ Configuring RBAC rules ...
🔎 Verifying Kubernetes components...
    ▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5
🌟 Enabled addons: storage-provisioner, default-storageclass
🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

minikube downloads, installs, and runs its default k8s version, v1.22.2 here (a different version can be requested with the --kubernetes-version flag). Our cluster is ready:

% kubectl get nodes -o wide

NAME       STATUS   ROLES                  AGE     VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION   CONTAINER-RUNTIME
minikube   Ready    control-plane,master   7m45s   v1.22.2   192.168.49.2   <none>        Ubuntu 20.04.2 LTS   5.14.8-arch1-1   docker://20.10.8

What is Tilt?

Tilt is a tool that helps developers sync their code into a k8s cluster and manage k8s objects. It watches files and applies changes to applications that are already running in k8s. It also has a great UI, or rather two: a terminal-based one, for people like me who spend most of their time in a terminal, and a web-based one. The web UI is the more powerful of the two.

Tilt uses the Starlark language for configuration, as Bazel does, although the two Starlark dialects differ slightly.

Bazel again

We build everything with Bazel, except k8s and minikube. We assume those two are already installed and configured.

We need a couple of things for each component of our system to deploy it into the k8s cluster, namely:

- Docker image

- k8s deployment definition

- k8s service definition

Bazel produces the k8s definitions with :yaml targets. We’ll look at them in a moment; first, let me define the build flow.

Tilt and Bazel: the flow

Tilt is a great tool. It can run go build, build Docker images, deploy them into the k8s cluster, and so on. But we use Bazel, which does the same, so we will not use that part of Tilt. Instead, we call Bazel from inside Tilt. This gives us a clear separation of duties: Bazel builds binaries, creates images, and produces k8s manifests, while Tilt syncs them into the k8s cluster.

The integration between Tilt and Bazel lives in bazel.Tiltfile; Tilt’s main config is the Tiltfile in the project root.

Run Tilt with Bazel

Tilt is a tool written in Go and ships as a single binary, which means Bazel can download and run it. It’s also possible to build a tool from source, as Bazel does with protobuf, but that requires writing additional rules. Tilt, on the other hand, publishes pre-built binaries on its release page, so we simply download the one we need. There are binaries for different operating systems; we pick two, Linux and macOS, and download the right one depending on the OS.

This gives us one more command in our toolset that Bazel manages. The reasons to build and run tools this way are the same as before:

- to have a unified interface for everything

- to lock tool versions

- to avoid installing tools separately.

WORKSPACE file

WORKSPACE is a special file. It determines the project root for Bazel and contains commands for downloading rules and dependencies, some settings for toolchains, and so on. See documentation for details.

In this file we have the following things:

# WORKSPACE

load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

TILT_VERSION = "0.31.2"

TILT_ARCH = "x86_64"

TILT_URL = "https://github.com/windmilleng/tilt/releases/download/v{VER}/tilt.{VER}.{OS}.{ARCH}.tar.gz"

http_archive(
    name = "tilt_linux_x86_64",
    build_file_content = "exports_files(['tilt'])",
    sha256 = "9ec1a219393367fc41dd5f5f1083f60d6e9839e5821b8170c26481bf6b19dc7e",
    urls = [TILT_URL.format(
        ARCH = TILT_ARCH,
        OS = "linux",
        VER = TILT_VERSION,
    )],
)

http_archive(
    name = "tilt_darwin_x86_64",
    build_file_content = "exports_files(['tilt'])",
    sha256 = "ade0877e72f56a089377e517efc86369220ac7b6a81f078e8e99a9c29aa244eb",
    urls = [TILT_URL.format(
        ARCH = TILT_ARCH,
        OS = "mac",
        VER = TILT_VERSION,
    )],
)

We pin Tilt’s version and the checksum of each archive, and define two repository targets, one per OS.
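To see what the URL template expands to, and how a checksum for the sha256 attribute could be computed, here is a minimal shell sketch. The helper names tilt_url and sha256_stdin are hypothetical and not part of the project; the template string is the one from the WORKSPACE file.

```shell
#!/bin/bash
set -euo pipefail

# Expand the same URL template as TILT_URL in WORKSPACE.
# Arguments: version, OS ("linux" or "mac"), architecture.
# (tilt_url is a hypothetical helper name.)
tilt_url() {
  local ver="$1" os="$2" arch="$3"
  echo "https://github.com/windmilleng/tilt/releases/download/v${ver}/tilt.${ver}.${os}.${arch}.tar.gz"
}

# Print the SHA-256 of stdin using whichever tool is installed
# (sha256sum on most Linux systems, shasum on macOS).
# (sha256_stdin is a hypothetical helper name.)
sha256_stdin() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum | cut -d' ' -f1
  else
    shasum -a 256 | cut -d' ' -f1
  fi
}

# Show the URL Bazel would download for the Linux archive.
tilt_url "0.31.2" "linux" "x86_64"
```

Piping a downloaded archive through sha256_stdin, for example with curl -fsSL "$(tilt_url 0.31.2 linux x86_64)" | sha256_stdin, should give the value to compare against the sha256 attribute when bumping TILT_VERSION.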

tools/BUILD file

In the tools directory, we have a BUILD file with the following targets:

sh_binary(
    name = "tilt-up",
    srcs = ["wrapper.sh"],
    args = [
        "tilt",
        "up",
        "--legacy=true",
    ],
    data = select({
        "@bazel_tools//src/conditions:darwin": ["@tilt_darwin_x86_64//:tilt"],
        "//conditions:default": ["@tilt_linux_x86_64//:tilt"],
    }),
)

sh_binary(
    name = "tilt-down",
    srcs = ["wrapper.sh"],
    args = [
        "tilt",
        "down",
        "--delete-namespaces",
    ],
    data = select({
        "@bazel_tools//src/conditions:darwin": ["@tilt_darwin_x86_64//:tilt"],
        "//conditions:default": ["@tilt_linux_x86_64//:tilt"],
    }),
)

Each target calls the Bash script wrapper.sh (we’ll look at it in a moment) with the name of the actual binary and its parameters. The data attribute determines which OS to download the binary for: it uses a select expression, which picks the right repository target depending on the OS.

wrapper.sh

It’s a simple script. Based on the value of $OSTYPE, it sets the actual path to the downloaded binary within the Bazel cache and runs the binary.

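#!/bin/bash

set -euo pipefail

# First argument is the tool name; the rest is passed to the tool as-is.
TOOL=$1
shift

if [[ "$OSTYPE" == "darwin"* ]]; then
  # macOS may not ship realpath, so define a minimal fallback.
  realpath() {
    [[ $1 = /* ]] && echo "$1" || echo "$PWD/${1#./}"
  }
  TOOL_PATH="$(realpath "external/${TOOL}_darwin_x86_64/${TOOL}")"
else
  TOOL_PATH="external/${TOOL}_linux_x86_64/${TOOL}"
fi

# Resolve to an absolute path before changing directory.
TOOL_PATH="$(realpath "${TOOL_PATH}")"

# Run the tool from the workspace root.
cd "$BUILD_WORKSPACE_DIRECTORY"
exec "${TOOL_PATH}" "$@"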
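The realpath fallback in the macOS branch is the least obvious part of the script, so here is a small standalone sketch of the same logic. The function is renamed to abs_path (a hypothetical name) so that it does not shadow the system realpath; note that, like the original, it only prefixes $PWD and does not resolve symlinks or ".." segments.

```shell
#!/bin/bash
set -euo pipefail

# Same logic as the realpath fallback in wrapper.sh:
# absolute paths pass through unchanged, relative paths are
# prefixed with $PWD after dropping a leading "./".
# (abs_path is a hypothetical name used only in this sketch.)
abs_path() {
  if [[ $1 = /* ]]; then
    echo "$1"
  else
    echo "$PWD/${1#./}"
  fi
}

abs_path "/usr/bin/env"
abs_path "./external/tilt_darwin_x86_64/tilt"
abs_path "external/tilt_darwin_x86_64/tilt"
```

The path has to be made absolute before the cd into $BUILD_WORKSPACE_DIRECTORY, because the external/... path is relative to the directory where Bazel starts the script.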

K8s objects: namespace, deployments, and services

To deploy our gRPC service and Envoy into the k8s cluster, we need several YAML files, which k8s uses to create its cluster objects. Since we use Bazel and call it from inside the Tilt configuration script, we have to produce those YAML files with Bazel, like this:

% bazel run //k8s:ns
...bazel info skipped...
apiVersion: v1
kind: Namespace
metadata:
  name: bazel-k8s-envoy

:yaml targets

For each of our microservices we have a :yaml target. Under the hood, it uses Carvel Ytt, another great tool in my collection. Before, I used rules_k8s, but at some point I needed more flexibility with templating, so I decided to look for something new.

I tried kustomize, but a) it supports native k8s objects only, while I had to work with non-native objects, and b) I wanted templating and overlaying together. Fortunately, I found Ytt, which covers my needs.

Here is our rule:

genrule(
    name = "yaml",
    srcs = [
        "//k8s:defaults.yaml",
        "//k8s/base:service.yaml",
        ":values.yaml",
    ],
    outs = ["manifest.yaml"],
    cmd = """
echo $(location @com_github_vmware_tanzu_carvel_ytt//cmd/ytt) \
  `ls $(SRCS) | sed 's/^/-f /g'` \
> $@
    """,
    executable = True,
    tools = [
        "@com_github_vmware_tanzu_carvel_ytt//cmd/ytt",
    ],
)

This is equivalent to the following call:

% ytt \
    -f k8s/defaults.yaml \
    -f k8s/base/service.yaml \
    -f values.yaml

where:

- k8s/defaults.yaml defines a schema (the values that are allowed) along with their default values.

- k8s/base/service.yaml is the main template.

- values.yaml holds the data values unique to each service.

For more examples of the tool, see ytt playground.

Bazel’s genrule lets us run a user-defined Bash command like the one above, and we can rely on the pinned ytt dependency, which is built and run automatically when we call the :yaml target.
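The ls ... | sed part of the genrule command only prefixes each source file with -f. A minimal sketch of that step in plain Bash is shown below; to_f_flags is a hypothetical helper, not part of the project. One difference worth knowing: ls sorts its output, while this loop keeps the files in the order given, which may matter if the tool is sensitive to argument order.

```shell
#!/bin/bash
set -euo pipefail

# Turn a list of file paths into "-f <path>" pairs, keeping their order.
# (to_f_flags is a hypothetical helper name used only in this sketch.)
to_f_flags() {
  local flags=""
  local f
  for f in "$@"; do
    # Add a separating space only when flags is already non-empty.
    flags+="${flags:+ }-f $f"
  done
  echo "$flags"
}

to_f_flags k8s/defaults.yaml k8s/base/service.yaml values.yaml
```

The sketch assumes file paths without spaces, which is also what the sed-based version in the genrule assumes.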

:yaml targets with rules_ytt

Recently we open-sourced a ruleset, eBay/rules_ytt, a wrapper for the Ytt tool. With the new ruleset, our :yaml target becomes clearer:

load("@com_github_ebay_rules_ytt//:def.bzl", "ytt")

ytt(
    name = "yaml",
    srcs = [
        "//k8s:defaults.yaml",
        "//k8s/base:service.yaml",
        ":values.yaml",
    ],
    image = ":image.digest",
)

It’s almost the same as the genrule above. The difference is the image attribute, which accepts the :image.digest label; that target returns the digest of a Docker image. Here is how we can use it:

diff --git a/k8s/base/service.yaml b/k8s/base/service.yaml
index 5216767..85040e2 100644
--- a/k8s/base/service.yaml
+++ b/k8s/base/service.yaml
@@ -19,7 +19,7 @@ spec:
     spec:
       containers:
         - name: #@ data.values.app_name
-          image: #@ "bazel/%s:image" % data.values.app_name
+          image: #@ "bazel/{}@{}".format(data.values.app_name, data.values.image_digest)
           ports:
             - containerPort: 5000
           resources:

In the resulting manifest, it looks like this:

containers:
  - name: service-one
    image: bazel/service-one@sha256:86850d756c557c789ae62decc08ab65da07ff2b3a5fd91c36714a2ba8d1ee4af
    ports:
      - containerPort: 5000
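If you want to double-check that a rendered manifest references images by digest rather than by tag, a rough grep-based sketch like the following could help. It is an illustration only: images_pinned_by_digest is a hypothetical helper, and the check is a plain text match on image: lines, not a YAML parser.

```shell
#!/bin/bash
set -euo pipefail

# Succeeds only if every "image:" line read from stdin references
# the image by sha256 digest (name@sha256:<64 hex characters>).
# (images_pinned_by_digest is a hypothetical helper name.)
images_pinned_by_digest() {
  local unpinned
  # Collect image lines that do NOT end in a sha256 digest.
  unpinned="$(grep -E '^[[:space:]]*(- )?image:' \
    | grep -Ev '@sha256:[0-9a-f]{64}[[:space:]]*$' || true)"
  [[ -z "$unpinned" ]]
}

# Example with the fragment from the manifest above.
if images_pinned_by_digest <<'EOF'
containers:
  - name: service-one
    image: bazel/service-one@sha256:86850d756c557c789ae62decc08ab65da07ff2b3a5fd91c36714a2ba8d1ee4af
EOF
then
  echo "all images are pinned by digest"
fi
```

Assuming //k8s:ns-style targets print their manifest to stdout, the output of bazel run for a :yaml target could be piped into the same function.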

That’s all we need to deploy our service into the k8s cluster.

% bazel run //tools:tilt-up

This command runs Tilt, which creates the namespace, deployments, and services and starts watching files. We deploy everything into the dedicated namespace bazel-k8s-envoy.

Let’s check it there:

% kubectl get ns | grep bazel
bazel-k8s-envoy   Active   21s

% kubectl get deploy,po,svc
NAME                          READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/authz         1/1     1            1           64s
deployment.apps/envoy         1/1     1            1           63s
deployment.apps/service-one   1/1     1            1           63s

NAME                              READY   STATUS    RESTARTS   AGE
pod/authz-94cfcdb8b-6sqzs         1/1     Running   0          64s
pod/envoy-8b4d95588-2v98b         1/1     Running   0          63s
pod/service-one-bc4bf85d7-5blz9   1/1     Running   0          63s

NAME                  TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE
service/authz         ClusterIP   None         <none>        5000/TCP            64s
service/envoy         ClusterIP   None         <none>        8080/TCP,8081/TCP   63s
service/kubernetes    ClusterIP   10.96.0.1    <none>        443/TCP             3h28m
service/service-one   ClusterIP   None         <none>        5000/TCP            63s

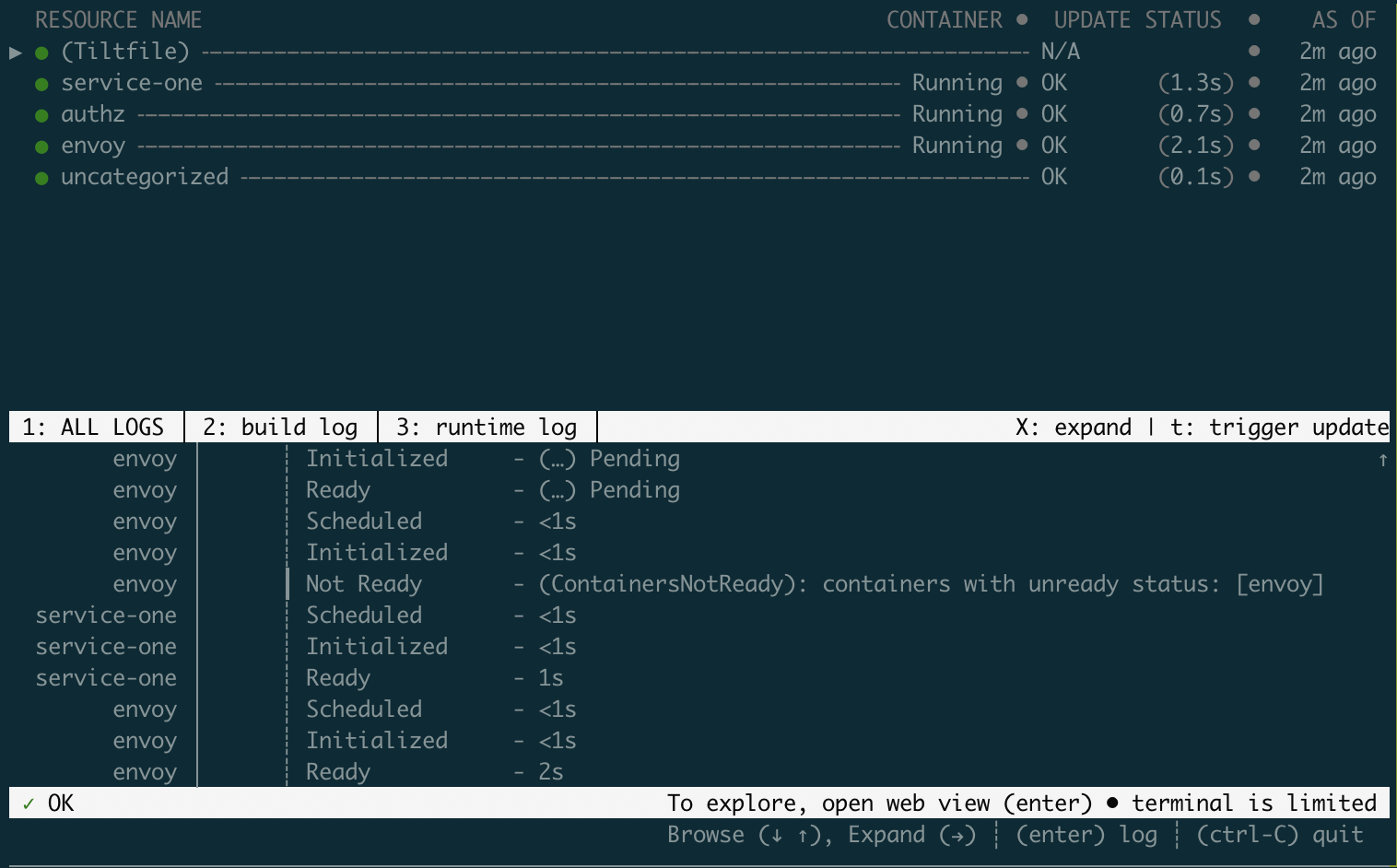

And this is how Tilt console looks like:

Pressing Enter opens the Tilt web console at http://localhost:10350, which has more features, such as restarting pods, changing trigger modes, and viewing and filtering logs.

When you don’t need Tilt anymore, press Ctrl-C to stop the console and run:

% bazel run //tools:tilt-down

It deletes all deployed objects from the cluster and the namespace itself.

Have fun with k8s now!